I just read High Performance Web Sites, an excellent O'Reilly book about how to improve the performance of your website by focusing on the frontend. The book gives 14 weighted rules to follow that will improve the load time of your web page. Reducing the number of HTTP requests, gzipping components, and adding far-future Expires headers all lead to pages that load much faster.
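To make two of those rules concrete, here is a minimal Python sketch of what a server might do for a static component: attach far-future caching headers and gzip the body. This is my own illustration, not code from the book. The helper names `far_future_headers` and `gzip_component` and the ten-year lifetime are assumptions chosen for the example.

```python
import gzip
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def far_future_headers(years=10, now=None):
    """Build caching headers that expire roughly `years` from now.

    Hypothetical helper: the ten-year default is an arbitrary choice.
    """
    now = now or datetime.now(timezone.utc)
    lifetime = timedelta(days=365 * years)
    return {
        # HTTP dates are always expressed in GMT
        "Expires": format_datetime(now + lifetime, usegmt=True),
        "Cache-Control": f"max-age={int(lifetime.total_seconds())}",
    }


def gzip_component(body):
    """Compress a response body and return it with the matching headers."""
    compressed = gzip.compress(body)
    headers = {
        "Content-Encoding": "gzip",
        "Content-Length": str(len(compressed)),
    }
    return compressed, headers


if __name__ == "__main__":
    script = b"function hello() { return 'hello'; }\n" * 100
    compressed, headers = gzip_component(script)
    headers.update(far_future_headers())
    print(len(script), "bytes before,", len(compressed), "bytes after gzip")
    print(headers)
```

A component served with a far-future expiry should be given a new file name whenever it changes, because browsers will not ask for it again until the cached copy expires.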

The author also describes a tool called YSlow that measures a number of performance aspects of your page. It reports the total load time, the page weight, a breakdown of component loading times, and an overall score that indicates how well optimized a page is. A score of A means that the website is doing all it can to eke out performance, while a score of F means that there is plenty of room for improvement.

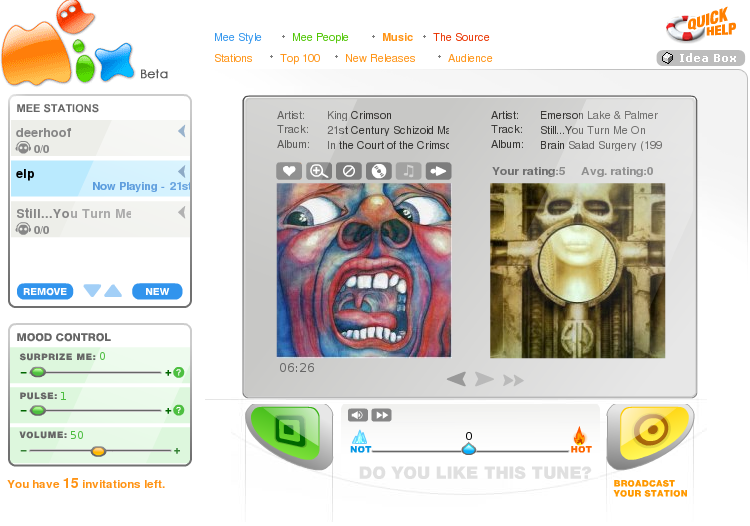

I applied this tool to 50 well-known Music 2.0 sites and recorded the front page load time, the YSlow score, the page weight (the amount of data downloaded), and the total number of HTTP requests needed to download the page. As you can see from the table, Music 2.0 sites have much room for improvement. The average load time for a Music 2.0 page is 6.6 seconds. Ruckus was the worst, with a load time of over 30 seconds. Ruckus also has one of the lowest YSlow scores (30), showing that there are lots of things Ruckus can do to improve its page loading time. According to YSlow, for starters, Ruckus could combine its 23 JavaScript files into one, saving 22 very expensive HTTP requests. Note that Flash-heavy sites like Musicovery and MusicLens appear to do well here, but they've just shifted the loading time into a Flash app that is not measured by YSlow.

| Name | Load time (secs) | Score | Page weight (K) | HTTP requests |
|---|---|---|---|---|
| Amazonmp3 | 4.722 | D (65) | 325.3 | 57 |
| Aol music | 7.6 | F (36) | 306.9 | 91 |
| All music guide | 12.013 | F (37) | 479.2 | 113 |
| Amie street | 3.291 | F (39) | 576.3 | 51 |
| Artistserver | 5.537 | F (44) | 173.3 | 39 |
| blogs.sun.com | 2.4 | F (58) | 103.3 | 22 |
| Cruxy | 3.491 | F (53) | 707.3 | 43 |
| Facebook | 6.45 | F (43) | 197 | 110 |
| Finetune | 10.6 | F (53) | 129.6 | 22 |
| Goombah | 5.269 | F (49) | 185.2 | 33 |
| Grabb.it | 3.398 | D (67) | 77.4 | 32 |
| Grooveshark | 2.303 | D (60) | 254.4 | 30 |
| Haystack | 5.796 | F (50) | 495.7 | 71 |
| iLike | 4.71 | D (62) | 190.8 | 24 |
| Lala | 5.548 | B (83) | 33.8 | 19 |
| Last.fm | 3.544 | C (71) | 408.3 | 59 |
| MP3 Realm | 0.802 | B (83) | 18.3 | 7 |
| Midomi | 29.872 | D (63) | 238 | 34 |
| Mog | 14.712 | B (80) | 293.3 | 53 |
| Mp3tunes | 10.886 | F (56) | 439.9 | 45 |
| MusicBrainz | 7.8 | F (44) | 369 | 35 |
| MusicIP | 1.517 | C (72) | 80.3 | 11 |
| MusicLens | 0.965 | A (96) | 5 | 2 |
| MusicMobs | 2.372 | D (62) | 161.4 | 31 |
| music.of.interest | 2.239 | C (79) | 105.2 | 7 |
| Musicovery | 1.315 | A (92) | 144.6 | 5 |
| MyStrands | 5.463 | F (55) | 222.5 | 33 |
| Napster | 3.876 | F (27) | 374.9 | 58 |
| OWL Multimedia | 3.963 | F (36) | 469.2 | 49 |
| OneLLama | 11.777 | F (40) | 341.4 | 59 |
| Pandora | 5.732 | D (63) | 957.5 | 17 |
| QLoud | 1.336 | F (52) | 206.7 | 38 |
| Radio Paradise | 2.445 | F (55) | 254 | 60 |
| Rate Your Music | 7.951 | C (71) | 634.9 | 71 |
| Rhapsody | 7.183 | F (31) | 782.1 | 121 |
| Ruckus | 34.039 | F (30) | 766.2 | 73 |
| Shoutcast | 2.452 | D (61) | 96.4 | 22 |
| Shazam | 10.442 | F (56) | 507.8 | 46 |
| Slacker | 16.803 | F (48) | 373.5 | 25 |
| Snapp Radio | 11.747 | C (78) | 38.6 | 8 |
| Snapp Radio2 | 0.986 | B (85) | 68.2 | 5 |
| Songbird | 5.636 | D (61) | 621.2 | 37 |
| Soundflavor | 7.879 | F (44) | 559.1 | 60 |
| Spiral Frog | 3.535 | F (46) | 407.6 | 90 |
| The Filter | 8.229 | F (37) | 710.3 | 61 |
| The Hype Machine | 6.395 | F (48) | 159.2 | 32 |
| Hype Machine(beta) | 3.92 | F (56) | 233.2 | 49 |
| Tune core | 5.945 | F (47) | 222.3 | 56 |
| Yahoo Music | 5.211 | F (55) | 481.6 | 35 |
| Youtube.com | 4.955 | D (65) | 204.2 | 65 |
| YottaMusic | 2.127 | C (76) | 51.3 | 42 |
| ZuKool Music | 4.046 | D (67) | 244.3 | 20 |

I've also included SnappRadio (a mashup that I wrote a while back) along with a rewrite (SnappRadio2) that uses the Google Web Toolkit. One of the primary goals of GWT is to make sure that your web app performs well. This is evident, as the load time for my app went from a laggy 12 seconds to a snappy one second.

Looking at this data, it looks like just about all of the Music 2.0 sites could cut their page load times in half with just a few simple techniques. Combining JavaScript code into a single file and adding far-future Expires headers to JavaScript and images take little time to implement but can have a surprisingly large positive impact on performance.
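Combining scripts can be as simple as concatenating them at build time. The sketch below is one way to do it in Python, assuming the scripts are plain files that can safely be loaded in a fixed order. The function name `combine_scripts` and the file names in the usage note are hypothetical, and a real build would probably also minify the result.

```python
from pathlib import Path


def combine_scripts(sources, bundle_path):
    """Concatenate JavaScript files, in the order given, into one bundle.

    Returns the number of HTTP requests saved compared with serving
    each source file separately.
    """
    parts = []
    for src in sources:
        text = Path(src).read_text(encoding="utf-8")
        # The extra ";" guards against a file that omits its final
        # semicolon and would otherwise run into the next file.
        parts.append(f"/* {Path(src).name} */\n{text}\n;")
    Path(bundle_path).write_text("\n".join(parts), encoding="utf-8")
    return max(len(sources) - 1, 0)
```

Calling `combine_scripts(["a.js", "b.js", "c.js"], "bundle.js")` writes one file and returns 2. For a page with 23 scripts, like the Ruckus front page, the same call would return 22.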

I highly recommend the book, along with the website of Yahoo's Exceptional Performance team. Both are filled with techniques and tools for improving website performance.

Yesterday, I sat on a panel at the Pop and Policy summit in Montreal.

The topic was music recommendation. I was joined by Brian Whitman

(founder of Echo Nest), Doug Eck (Machine Learning and music at

the University of Montreal), Diane Sammer (CEO of Goombah), and Sandy

Pearlman (MoodLogic, producer of The Clash, Blue Oyster

Cult). The panel was moderated by journalist Karla Starr (who

wrote an excellent piece on
