The Racist Recommender

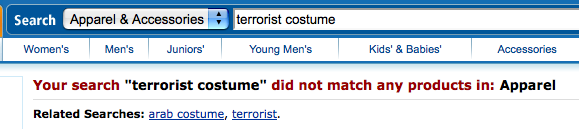

Of course, this isn't really Amazon's view. Amazon doesn't equate terrorists with Arabs. It's just that the company uses an algorithm that looks for statistical patterns in customers' searches and uses those patterns to suggest related searches. This algorithm notices that the same people who search for 'terrorist costume' often also search for 'arab costume'. The resulting suggestion, which seems to equate terrorists with Arabs, is just a reflection of society's prejudices.
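Amazon's actual algorithm isn't public, so the following is only a hypothetical sketch of the general idea: count how often two queries are issued by the same user, then suggest the queries that co-occur most often. The function names `build_cooccurrence` and `related_searches`, and the toy query log, are invented for illustration.

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_cooccurrence(sessions):
    """Count how often each pair of distinct queries comes from the same user."""
    counts = defaultdict(Counter)
    for queries in sessions:
        # Deduplicate so repeating a query doesn't inflate its pair counts.
        unique = sorted(set(queries))
        for a, b in combinations(unique, 2):
            counts[a][b] += 1
            counts[b][a] += 1
    return counts


def related_searches(counts, query, k=3):
    """Return up to k queries most often searched by the same users as `query`.

    Ties are broken alphabetically so the output is deterministic.
    """
    neighbors = counts.get(query, Counter())
    ranked = sorted(neighbors.items(), key=lambda item: (-item[1], item[0]))
    return [q for q, _ in ranked[:k]]


# Hypothetical toy log: each inner list holds the queries one user issued.
sessions = [
    ["pirate costume", "eye patch", "parrot toy"],
    ["pirate costume", "eye patch"],
    ["pirate costume", "treasure map"],
    ["eye patch", "first aid kit"],
]

counts = build_cooccurrence(sessions)
print(related_searches(counts, "pirate costume"))
# ['eye patch', 'parrot toy', 'treasure map']
print(related_searches(counts, "eye patch"))
# ['pirate costume', 'first aid kit', 'parrot toy']
```

Nothing in this code understands what a query means: 'first aid kit' shows up next to 'eye patch' only because one user happened to search for both. Scaled up to millions of users, the same counting will surface whatever associations those users make, prejudiced or not.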

This isn't the first time we've seen a racist recommender. Wal*Mart got into a little bit of trouble when its recommender started associating films about black historical figures, such as MLK, with the movie Planet of the Apes. Because automatic recommenders learn from the behavior of the population at large, they reflect that population's biases; in effect, they become a mirror of our society. If a recommender is giving racist recommendations, the racism most likely exists in the population at large.