Social tags (such as those applied at sites like Last.fm or Qloud) are particularly useful for building recommender systems that can give transparent, explainable recommendations. Instead of saying "Other people who like the Beatles also like the Rolling Stones," as a traditional collaborative filtering recommender would, a tag-based recommender can offer more transparent explanations such as: "we are recommending Deerhoof because you seem to like quirky noise pop with female vocalists and mad drumming." This transparency can make tag-based recommendations superior to those of traditional collaborative filtering recommenders. However, social tags suffer from a sparsity problem: not all music is equally tagged. Popular artists such as the Beatles and U2 have acquired lots of tags, less popular artists have few tags, and new, unknown artists have no tags at all. Social tags can give excellent, transparent recommendations for established artists, but they cannot offer recommendations for untagged new or unknown artists.
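
To make the idea concrete, here is a minimal sketch of how a tag-based recommender might produce an explanation, and why an untagged artist drops out. Everything in it is an assumption for illustration: the function name `explain_recommendation`, the tag weights, and the simple weighted-overlap score are made up and are not the system described in this post.

```python
def explain_recommendation(user_tags, artist_tags, top_n=3):
    """Score an artist by weighted tag overlap with the listener's profile.

    Returns (score, reasons), where reasons are the shared tags that
    contributed most to the score. Both inputs map tag -> weight.
    """
    # Contribution of each tag the listener and the artist have in common.
    shared = {
        tag: user_tags[tag] * weight
        for tag, weight in artist_tags.items()
        if tag in user_tags
    }
    score = sum(shared.values())
    # Strongest contributions first; ties broken alphabetically.
    reasons = sorted(shared, key=lambda tag: (-shared[tag], tag))[:top_n]
    return score, reasons


# Hypothetical listener profile and catalog (weights are invented).
user_profile = {"noise pop": 0.9, "female vocalists": 0.7, "quirky": 0.6}
catalog = {
    "Deerhoof": {
        "noise pop": 0.8,
        "female vocalists": 0.6,
        "quirky": 0.9,
        "mad drumming": 0.5,
    },
    "Brand New Band": {},  # a new artist nobody has tagged yet
}

for artist, tags in catalog.items():
    score, reasons = explain_recommendation(user_profile, tags)
    if reasons:
        print(f"Recommending {artist} because you seem to like: {', '.join(reasons)}")
    else:
        print(f"Cannot recommend {artist}: no tags to match against")
```

The untagged artist scores zero no matter how good a match the music actually is, which is the sparsity problem in miniature.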

To address this tag-sparsity problem, we are building a system that will be able to automatically apply social tags to new music using only the audio. We use signal processing and machine learning to predict what social tags people would apply to the music. This helps us avoid the 'cold-start' problem typical in recommender systems that rely on the wisdom of the crowds. More details about this system are in this paper.

This work has been a collaboration between Sun Labs and Professor Doug Eck from the University of Montreal and his extremely smart student Thierry Bertin-Mahieux. The combination of Doug and Thierry's hardcore signal processing and machine learning skills with my blogging skills makes this an excellent, fruitful collaboration.

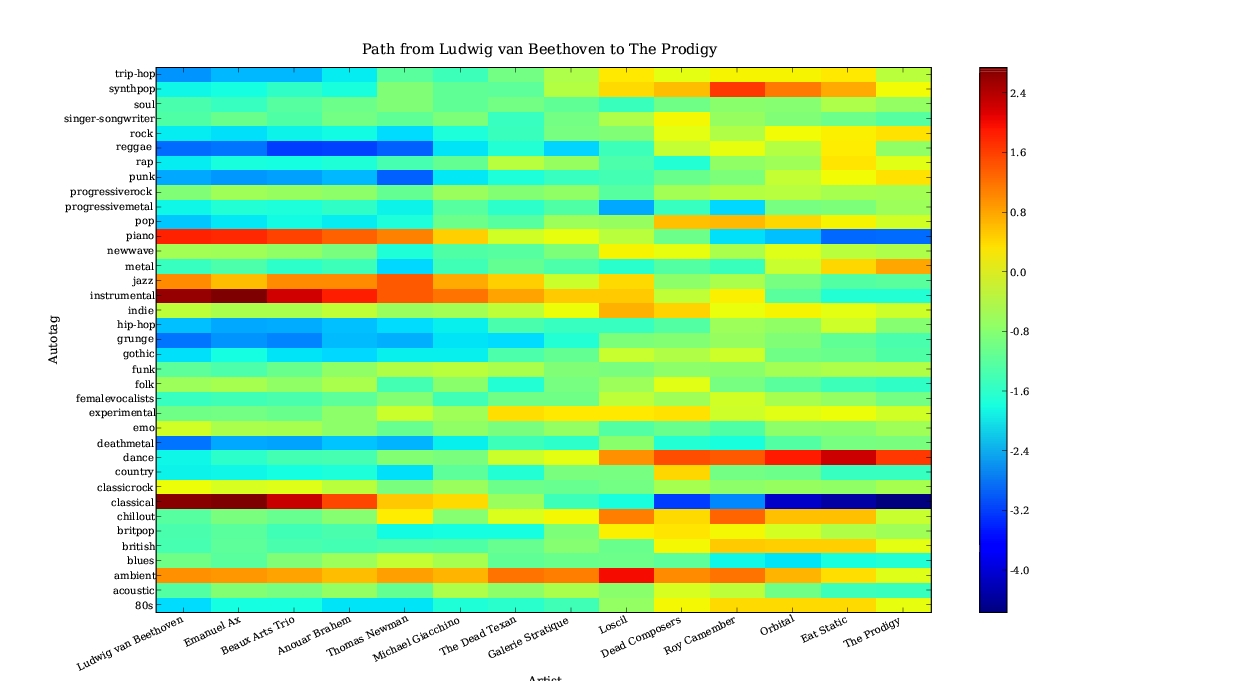

Doug has created a few examples of what it sounds like to navigate the autotagging space. Thierry applied a dimensionality reduction technique called Isomap to create a nearest-neighbor graph of artists, which we use to find the shortest path joining two artists. Doug sampled 5-second segments from songs by these artists and chained them together in an MP3 file. Here's one example:
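
For readers curious about the path-finding step, here is a rough sketch of the general idea: build a k-nearest-neighbor graph over artist tag vectors, then run Dijkstra's algorithm to find the shortest path between two artists. This is not Thierry's code and it does not perform the Isomap embedding itself; it only illustrates the neighbor-graph and shortest-path part. The artist names, the two-tag vectors, the choice of `k`, and the function names are all made up for the example.

```python
import heapq
import math


def euclidean(a, b):
    """Straight-line distance between two equal-length tag-weight vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_graph(vectors, k):
    """Connect each artist to its k nearest neighbors (edges made symmetric)."""
    graph = {name: {} for name in vectors}
    for name, vec in vectors.items():
        nearest = sorted(
            (euclidean(vec, other_vec), other)
            for other, other_vec in vectors.items()
            if other != name
        )[:k]
        for dist, other in nearest:
            graph[name][other] = dist
            graph[other][name] = dist
    return graph


def shortest_path(graph, start, goal):
    """Dijkstra's algorithm; returns the list of artists from start to goal."""
    best = {start: 0.0}
    previous = {}
    heap = [(0.0, start)]
    done = set()
    while heap:
        dist, node = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if node == goal:
            break
        for neighbor, weight in graph[node].items():
            candidate = dist + weight
            if candidate < best.get(neighbor, math.inf):
                best[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))
    if goal not in best:
        return None  # the two artists are not connected in the graph
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1]


# Invented (classical, dance) tag weights for five placeholder artists.
artists = {
    "artist_a": (1.0, 0.0),
    "artist_b": (0.9, 0.3),
    "artist_c": (0.7, 0.6),
    "artist_d": (0.4, 0.8),
    "artist_e": (0.0, 0.9),
}

graph = knn_graph(artists, k=2)
path = shortest_path(graph, "artist_a", "artist_e")
print(" -> ".join(path))
```

Because each artist is linked only to its closest neighbors, the path has to pass through intermediate artists and cannot jump straight from one end of the tag space to the other, which is what keeps each transition between segments gentle.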

From Beethoven to The Prodigy. [ mp3 ].

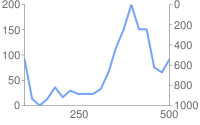

This graph shows the autotags that have been applied to a number of artists. Hotter colors indicate more strongly weighted tags. For instance, Beethoven is strongly tagged 'classical', 'instrumental' and 'piano' and weakly tagged 'deathmetal' and 'reggae', while The Prodigy is strongly tagged 'dance' with a bit of 'metal' thrown in and is extremely averse to 'classical' and 'piano'. The graph shows how we can get from Beethoven, with its strong 'classical' and 'piano' tags, to The Prodigy, with its strong 'dance' and weak 'classical' tags, in a dozen steps while minimizing iPod whiplash. It's a neat audio and visual representation of our work.

Doug has some other examples of these tag space navigations, including Coltrane to System of a Down, and Mozart to Nirvana. It's cool stuff worth checking out.