The details are here: SpecialTagatuneEvaluation

Friday Dec 12, 2008

Thursday Dec 11, 2008

Tuesday Dec 09, 2008

Here's an interesting reddit thread on tools for tagging your personal music collection: Anybody knows a media player allowing del.icio.us-like tagging of tracks? Some folks want the tags written directly into the MP3's ID3v2 tag so that the tags are not tied to any particular tool or database.
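
For the folks who want the tags stored in the file itself, here is a minimal sketch of what that could look like at the byte level. It assumes the tags go into a single ID3v2.4 user-defined text frame (TXXX) with a hypothetical description of "TAGS" and comma-separated values. That convention is an assumption for illustration, not something players agree on, and the code only builds the tag bytes; it does not merge them into an existing MP3.

```python
def synchsafe(n: int) -> bytes:
    """Encode n as a 4-byte ID3v2.4 synchsafe integer (7 bits per byte)."""
    if not 0 <= n < 1 << 28:
        raise ValueError("value does not fit in 28 bits")
    return bytes([(n >> 21) & 0x7F, (n >> 14) & 0x7F, (n >> 7) & 0x7F, n & 0x7F])


def txxx_frame(description: str, value: str) -> bytes:
    """Build an ID3v2.4 TXXX (user-defined text) frame, UTF-8 encoded."""
    # Body: encoding byte (0x03 = UTF-8), description, 0x00 terminator, value.
    body = b"\x03" + description.encode("utf-8") + b"\x00" + value.encode("utf-8")
    # Frame header: 4-byte ID, synchsafe body size, 2 flag bytes.
    return b"TXXX" + synchsafe(len(body)) + b"\x00\x00" + body


def id3v24_tag(frames) -> bytes:
    """Wrap frames in an ID3v2.4.0 tag header (no flags, no padding)."""
    payload = b"".join(frames)
    return b"ID3" + b"\x04\x00" + b"\x00" + synchsafe(len(payload)) + payload


# Hypothetical convention: del.icio.us-style tags as one comma-separated value.
tag = id3v24_tag([txxx_frame("TAGS", "road trip, mellow, 70s")])
print(len(tag))
```

In practice a maintained tag library (mutagen, for Python) is a better choice, since it handles existing tags, padding and older ID3 versions.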

Thursday Dec 04, 2008

Wednesday Dec 03, 2008

Tuesday Dec 02, 2008

In this unusual visualization, artists Fernanda Viégas and Martin Wattenberg have analyzed the lyrics of 10,000 songs looking for the words that describe body parts and have plotted the results as a function of 11 music genres. The resulting chart highlights the differences in lyric content between the various genres. It's an interesting graphic (but be warned, some parts of the graph, especially the Hip Hop genre, are not safe for work).

Monday Dec 01, 2008

Hey all - I need some help. I'm giving a short talk this week to some young folk (ages 15 to 20). One point I'd like to make is how music discovery is shifting away from traditional recommendation ('people who like X also like Y') and toward things like Guitar Hero and Rock Band. Since the audience is squarely in the Guitar Hero demographic, I thought I could make the point quite easily by playing a few Guitar Hero greatest hits. In particular, I'm looking for older songs by bands that teenagers would probably never have heard if not for Guitar Hero.

Even though I've played GH a bit, I'm not the right age to make the best call. So, to be specific: what is the best example of Guitar Hero leading teens to music that they would never otherwise have listened to?

40 years ago this month, the White Album was released by The Beatles. The White Album is perhaps my favorite from The Beatles, with classics like "While My Guitar Gently Weeps" and "Helter Skelter", whimsical tracks such as "Rocky Raccoon" and "Why Don't We Do It in the Road?", and head-scratchers like "Revolution 9" and "Wild Honey Pie".

There's an interesting look back at the White Album on the All Songs Considered podcast: 'The White Album' 40 years later, with all sorts of interesting tidbits (Paul McCartney plays drums on 'Back in the U.S.S.R.' after Ringo leaves the studio in a huff), recordings of practice sessions and songs that didn't make the cut. The podcast is worth a listen.

Tuesday Nov 25, 2008

After a long hiatus, Rocketsurgeon is back and is updating his comprehensive Music 2.0 directory. There's an RSS feed, so just add it to your feedreader and find out what's new in the Music 2.0 world. Hopefully, with his newly restored energy, Rocketsurgeon will update the wonderful Logos of Music 2.0 soon as well. There have been lots of changes in the music space since the spring of 2007.

Monday Nov 24, 2008

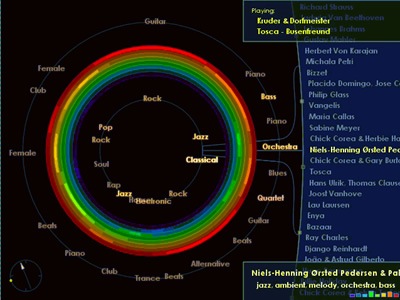

The interface is very simple: just type in the name of an artist that you like and the perceptron will give you a list of artists to try out. You can listen to music by the artist, read their Wikipedia bio, purchase tracks by the artist, add the artist to a playlist, etc. If you sign up and log in to the perceptron, it tracks your activity and starts to adapt the recommendations based upon what you pay attention to. One thing I really like is how the perceptron tells you the source of a recommendation, like so:

This explanation is helpful in giving me a better understanding of why a particular recommendation was made.

maryrosecook explains the algorithm she uses in her blog. Included in her explanation is a table of weights that she's derived from user activity for the various sources of artist similarity data:

| Similarity source | Weight |
|---|---|
| Epitonic similar artists | 0.439 |
| Tiny Mix Tapes similar artists | 0.316 |
| Myspace top friends | 0.128 |
| Mixtapes | 0.075 |
| Record labels | 0.020 |
| Epitonic other artists | 0.016 |
| MP3 blogs | 0.003 |

It's no surprise that Epitonic similar artists have the highest weight and are responsible for a good portion of the recommendations. I am a bit surprised that the MP3 blogs have such a low impact on the recommendations. maryrosecook does indicate that these weights were derived from only a rather small amount of usage (200 user ratings), so they may change quite a bit as the system is used more.
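
To make the role of the weights concrete, here is a minimal sketch that scores candidate artists as the weighted sum of the sources linking them to a seed artist, and keeps the contributing sources so each recommendation can be explained. The weighted-sum rule and the `(artist_a, artist_b, source)` link format are assumptions for illustration; maryrosecook's actual algorithm is described on her blog and may differ. Only the weights come from the table.

```python
from collections import defaultdict

# Weights from the table; the scoring rule below is a hypothetical sketch.
SOURCE_WEIGHTS = {
    "Epitonic similar artists": 0.439,
    "Tiny Mix Tapes similar artists": 0.316,
    "Myspace top friends": 0.128,
    "Mixtapes": 0.075,
    "Record labels": 0.020,
    "Epitonic other artists": 0.016,
    "MP3 blogs": 0.003,
}


def recommend(seed, links, weights=SOURCE_WEIGHTS, top_n=5):
    """Rank artists linked to `seed` by the summed weight of their sources.

    links: iterable of (artist_a, artist_b, source) tuples (hypothetical format).
    Returns (artist, score, sources) tuples, highest score first.
    """
    scores = defaultdict(float)
    reasons = defaultdict(set)  # which sources produced each candidate
    for a, b, source in links:
        if seed not in (a, b) or a == b:
            continue
        other = b if a == seed else a
        scores[other] += weights.get(source, 0.0)  # unknown sources count 0
        reasons[other].add(source)
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(artist, round(score, 3), sorted(reasons[artist]))
            for artist, score in ranked[:top_n]]


links = [
    ("Seed", "Band X", "Epitonic similar artists"),
    ("Seed", "Band X", "MP3 blogs"),
    ("Band Y", "Seed", "Myspace top friends"),
    ("Band Z", "Band Y", "Mixtapes"),  # not linked to Seed, ignored
]
for artist, score, sources in recommend("Seed", links):
    print(artist, score, "because of:", ", ".join(sources))
```

Returning the sources alongside the score is what makes the "tells you the source of a recommendation" style of explanation cheap to produce.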

The recommendations from the perceptron are not always great. Sometimes you can see evidence of some recommendation spam (if I like the Beatles will I really like the Bloody Beetroots?). On the other hand, the recommendations from the perceptron are highly novel and diverse - very different from what you'd see at Last.fm, iTunes or Amazon.

In addition to the occasional bum recommendation, there are a few kinks: Wikipedia bios go astray (I'm reading about cloud formations instead of the bio for the band 'Cirrus' right now), and Wikipedia markup sometimes sneaks through the filters. The music collection is skewed toward the Myspace crowd, so us old folk who are looking for recommendations based upon Rick Wakeman or The Nice are up the creek without a recommendation. But despite these issues, I like the perceptron. It has an indie feel, something that was done more as a labor of love to help people find music than as just another way to make money selling music. The recommendations are offbeat, but for me that means finding out about a whole lot of bands that I've never heard of. I hope maryrosecook keeps at this project (well, at least until she gets hired by Last.fm). The perceptron is a great way to find new music. (Oh, and her blog is a pretty fun read too.)

Thanks to Zac for the tip!

Sunday Nov 23, 2008

Clive does seem to fall into a common trap that assumes that computers must be seeing nuances and connections that humans can't:

> Possibly the algorithms are finding connections so deep and subconscious that customers themselves wouldn’t even recognize them. At one point, Chabbert showed me a list of movies that his algorithm had discovered share some ineffable similarity; it includes a historical movie, “Joan of Arc,” a wrestling video, “W.W.E.: SummerSlam 2004,” the comedy “It Had to Be You” and a version of Charles Dickens’s “Bleak House.” For the life of me, I can’t figure out what possible connection they have, but Chabbert assures me that this singular value decomposition scored 4 percent higher than Cinematch — so it must be doing something right. As Volinsky surmised, “They’re able to tease out all of these things that we would never, ever think of ourselves.” The machine may be understanding something about us that we do not understand ourselves.

I was hoping to see Clive talk about the problems with the Netflix prize: how it overemphasizes the importance of relevance in recommendation at the expense of novelty and transparency. The teams involved in the Netflix prize spend all of their time trying to predict how many stars each of the many thousands of Netflix customers would give to movies. This skews the recommendations away from novel and diverse ones.
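
In Netflix Prize discussions, "SVD" usually meant a latent-factor model fitted by stochastic gradient descent to the known ratings (popularized by Simon Funk), not a textbook SVD of a full matrix. The sketch below is a minimal version of that kind of model, not Chabbert's algorithm. It shows what the teams are optimizing: predicted stars, scored by RMSE. Nothing in the objective rewards novelty, diversity or an explanation. The ratings, factor count and learning parameters are made-up illustrative values.

```python
import random


def train_mf(ratings, n_factors=2, lr=0.02, reg=0.02, epochs=200, seed=0):
    """Fit r_ui ~ mu + b_u + b_i + p_u . q_i to known ratings with SGD.

    ratings: list of (user, item, stars) tuples. Returns a predict(user, item)
    function; it raises KeyError for users or items not seen in training.
    """
    rng = random.Random(seed)
    mu = sum(r for _, _, r in ratings) / len(ratings)  # global mean rating
    users = sorted({u for u, _, _ in ratings})  # sorted for reproducible init
    items = sorted({i for _, i, _ in ratings})
    bu = {u: 0.0 for u in users}  # per-user bias
    bi = {i: 0.0 for i in items}  # per-item bias
    P = {u: [rng.gauss(0, 0.1) for _ in range(n_factors)] for u in users}
    Q = {i: [rng.gauss(0, 0.1) for _ in range(n_factors)] for i in items}

    def predict(u, i):
        return mu + bu[u] + bi[i] + sum(p * q for p, q in zip(P[u], Q[i]))

    data = list(ratings)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, r in data:
            err = r - predict(u, i)
            # Regularized gradient steps on biases and latent factors.
            bu[u] += lr * (err - reg * bu[u])
            bi[i] += lr * (err - reg * bi[i])
            for k in range(n_factors):
                pu, qi = P[u][k], Q[i][k]
                P[u][k] += lr * (err * qi - reg * pu)
                Q[i][k] += lr * (err * pu - reg * qi)
    return predict


def rmse(predict, ratings):
    """Root mean squared error, the Netflix Prize accuracy measure."""
    return (sum((r - predict(u, i)) ** 2 for u, i, r in ratings) / len(ratings)) ** 0.5


# Made-up ratings for illustration only.
ratings = [
    ("ann", "Joan of Arc", 5), ("ann", "Bleak House", 4), ("ann", "SummerSlam 2004", 1),
    ("bob", "Joan of Arc", 2), ("bob", "SummerSlam 2004", 5), ("bob", "It Had to Be You", 4),
    ("cat", "Bleak House", 5), ("cat", "It Had to Be You", 2), ("cat", "SummerSlam 2004", 1),
]
predict = train_mf(ratings)
print(round(rmse(predict, ratings), 3))
```

The learned factors have no built-in meaning, which is why a cluster like the one in the quote can score well on RMSE while remaining impossible to explain.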

Similarly, the Netflix prize pays no attention to helping people understand why something is being recommended. There are some good papers showing that recommenders that can explain why something is being recommended can improve a user's trust in the recommender and its recommendations.

The short and accessible paper "Being accurate is not enough: how accuracy metrics have hurt recommender systems" provides an excellent counterpoint to the approach taken by the Netflix prize. Some highlights from this paper:

- Item-Item similarity can bury the user in a 'similarity hole' of like items.

- Recommendations with higher diversity are preferred by users even when the lists perform worse on Netflix-prize style accuracy measures.
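
One common way to put a number on the diversity of a recommendation list is the average pairwise distance between the items in it (often called intra-list diversity). The minimal sketch below assumes each item is described by a set of tags and uses Jaccard distance; both choices are illustrative assumptions, not the method of the paper.

```python
from itertools import combinations


def jaccard_distance(a, b):
    """1 - |a & b| / |a | b|; defined as 0.0 when both sets are empty."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def intra_list_diversity(items, features):
    """Average pairwise distance between listed items (higher = more diverse)."""
    pairs = list(combinations(items, 2))
    if not pairs:
        return 0.0  # a list of 0 or 1 items has no pairs
    return sum(jaccard_distance(features[x], features[y]) for x, y in pairs) / len(pairs)


# Hypothetical tag sets for three items.
features = {
    "A": {"rock", "70s"},
    "B": {"rock", "70s"},
    "C": {"jazz"},
}
print(intra_list_diversity(["A", "B"], features))  # a 'similarity hole'
print(intra_list_diversity(["A", "C"], features))
```

A list stuck in a "similarity hole" scores near 0, even if every item in it would get a high predicted rating.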

When people learn that I work with recommender systems, they often ask me if I am working on the Netflix prize. I tell them no, for two reasons. First, there are people way smarter than me who are already working on this problem, and they will certainly get better results than I would ever be able to. Second, and perhaps more importantly, I don't think it is a very relevant problem to solve. There are other aspects of recommendation (novelty, diversity, transparency, steerability, coverage and trust) that are just as important, and a good recommender can't just optimize one aspect; it has to look at all of them.

Saturday Nov 22, 2008

Yes.com is an aggregator of information about terrestrial radio. They track the song-playing activity of many thousands of radio stations. Now they are making all of this wonderful data available via a web API.

You can do all sorts of things with the API - you can search for stations (by call letter, by location, by style of music etc.), find out what a particular station is playing, find out which artists or tracks are popular etc.

For instance, to find the stations near me I can just search using my zip code:

http://api.yes.com/1/stations?loc=03064

This yields a bunch of JSON (JavaScript Object Notation) that describes the local radio stations.
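
Here is a minimal client-side sketch using only the Python standard library: it builds the stations query URL in the same form as the example above and pulls station names out of a JSON response. The `stations` and `name` field names are hypothetical placeholders, since the response format isn't shown here; check them against a real response. No network call is made.

```python
import json
from urllib.parse import urlencode

API_BASE = "http://api.yes.com/1"  # base path taken from the example URL


def stations_url(**params):
    """Build a /stations query URL, e.g. stations_url(loc="03064")."""
    return f"{API_BASE}/stations?{urlencode(params)}"


def station_names(json_text, list_key="stations", name_key="name"):
    """Extract station names from a JSON response body.

    list_key and name_key are hypothetical field names, not confirmed API fields.
    """
    data = json.loads(json_text)
    return [station.get(name_key) for station in data.get(list_key, [])]


print(stations_url(loc="03064"))
# A made-up response body in the assumed shape:
print(station_names('{"stations": [{"name": "WBCN"}]}'))
```

Fetching the URL (for example with `urllib.request.urlopen`) and passing the decoded body to `station_names` would complete the round trip.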

The API lets you get all sorts of interesting data, including:

- The log of all songs played on any station in the last week

- The top songs on any station (based on plays or user voting)

- Overall popularity of a song or artist

- Related songs (based upon playlist co-occurrence)
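
The "related songs" idea can be illustrated with a minimal co-occurrence count over play logs: two songs are counted as related each time one is played within a few plays of the other on the same station. The log format, the window size and the counting rule are assumptions for illustration; the post doesn't say exactly how YES.com computes co-occurrence.

```python
from collections import Counter, defaultdict


def related_songs(playlogs, window=3):
    """Count how often songs are played within `window` plays of each other.

    playlogs: dict mapping station -> list of songs in play order (hypothetical).
    Returns dict song -> Counter of co-occurring songs (counts are symmetric).
    """
    related = defaultdict(Counter)
    for plays in playlogs.values():
        for i, song in enumerate(plays):
            # Only look ahead, so each nearby pair is counted once per occurrence.
            for other in plays[i + 1 : i + 1 + window]:
                if other != song:
                    related[song][other] += 1
                    related[other][song] += 1
    return related


# Made-up play logs for two hypothetical stations.
logs = {
    "Station A": ["Stairway to Heaven", "Take Me Home Tonight", "Kashmir", "Stairway to Heaven"],
    "Station B": ["Kashmir", "Stairway to Heaven"],
}
rel = related_songs(logs, window=1)
print(rel["Stairway to Heaven"].most_common())
```

A small window keeps "related" close to "played back to back"; a larger window finds more pairs but also more noise, which may be one way a lot of Eddie Money ends up in a related list.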

Some more examples:

- Top tracks on local radio station WBCN

- All the songs played yesterday on WBCN.

- Info about 'Stairway to Heaven'.

- Songs 'related' to 'Stairway to Heaven' (there's more Eddie Money on this list than I would have expected).

The API is full-featured, very fast and seems quite solid. My biggest gripe is that it serves up JSON and not XML (which is what just about every other music API serves), which means incorporating another parser into my code. The folks at YES are thinking about making an XML version, so even this gripe may be short-lived.

The API terms of use are quite reasonable - if you use their data on a web site, you must link back to YES.com. Commercial use is "available and often immediately granted by just ensuring proper linking."

This API has lots of data that hasn't been easily available before, data that can be used to enable music discovery, playlist generation and trend spotting. Well done to the Yes.com developers for providing such a clean and easy-to-use API, and well done to the Yes.com business folk who realize the value in making this data available. (And, by the way, the Yes.com site itself is pretty cool.)

This blog copyright 2010 by plamere