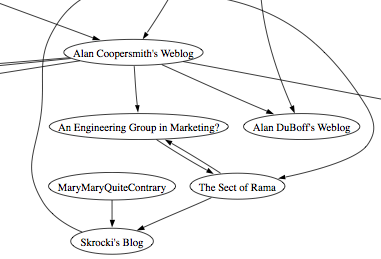

(60 node PDF version)

(200 node PDF version)

Update: Henry Story points out some obvious, missing links - turns out we haven't crawled all of blogs.sun.com yet, so if your well connected blog is not in the map, it will be soon.


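```java
// inMemoryCache, MAX_MEMORY_CACHE_SIZE and searchEngine are fields of the
// enclosing class (not shown in the post).

/** adds a string to the persistent set */
public void add(String s) {
    if (!contains(s)) {
        // buffer new strings in memory first
        inMemoryCache.add(s);
        // once the buffer is full, flush it to the search engine's index in one batch
        if (inMemoryCache.size() > MAX_MEMORY_CACHE_SIZE) {
            SimpleIndexer indexer = searchEngine.getSimpleIndexer();
            for (String cachedString : inMemoryCache) {
                // the string itself is used as the document key; the document has no content
                indexer.startDocument(cachedString);
                indexer.endDocument();
            }
            indexer.finish();
            inMemoryCache.clear();
        }
    }
}

/** tests to see if an item is in the set */
public boolean contains(String s) {
    if (inMemoryCache.contains(s)) {
        return true;
    } else {
        // not in the buffer, so ask the index whether a document with this key exists
        return searchEngine.isIndexed(s);
    }
}
```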

The code was surprisingly fast too. With a million items in the set, a 'contains' operation took a couple of hundred microseconds to execute.

So, this is one way you can abuse a search engine (the other way is to make it crawl ;)
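The pattern in the code above generalizes: buffer writes in a fast in-memory set, push them to a slower store in batches, and check both places on lookup. Below is a self-contained Java sketch of that pattern that runs without the search engine. `BackingIndex` and `InMemoryIndex` are hypothetical stand-ins for the search engine (a plain `HashSet` rather than an on-disk index), so the sketch shows the batching logic but none of the persistence.

```java
import java.util.HashSet;
import java.util.Set;

/**
 * Sketch of a set that buffers new strings in memory and flushes them to a
 * slower backing index in batches. BackingIndex and InMemoryIndex are
 * hypothetical stand-ins for the search engine used in the post.
 */
public class BufferedPersistentSet {

    /** Hypothetical interface for whatever stores the flushed strings. */
    interface BackingIndex {
        void indexAll(Set<String> batch);   // index a whole batch at once
        boolean isIndexed(String s);        // is s already in the index?
    }

    /** Toy backing index kept in memory; counts flushes so the batching is visible. */
    static class InMemoryIndex implements BackingIndex {
        private final Set<String> indexed = new HashSet<>();
        int flushCount = 0;

        @Override
        public void indexAll(Set<String> batch) {
            indexed.addAll(batch);   // copies, so the caller may clear its buffer afterwards
            flushCount++;
        }

        @Override
        public boolean isIndexed(String s) {
            return indexed.contains(s);
        }
    }

    private final Set<String> inMemoryCache = new HashSet<>();
    private final BackingIndex index;
    private final int maxMemoryCacheSize;

    public BufferedPersistentSet(BackingIndex index, int maxMemoryCacheSize) {
        this.index = index;
        this.maxMemoryCacheSize = maxMemoryCacheSize;
    }

    /** Adds s if absent; flushes the buffer once it grows past the limit. */
    public void add(String s) {
        if (contains(s)) {
            return;
        }
        inMemoryCache.add(s);
        if (inMemoryCache.size() > maxMemoryCacheSize) {
            index.indexAll(inMemoryCache);
            inMemoryCache.clear();
        }
    }

    /** Checks the cheap in-memory buffer first, then the backing index. */
    public boolean contains(String s) {
        return inMemoryCache.contains(s) || index.isIndexed(s);
    }

    public static void main(String[] args) {
        InMemoryIndex idx = new InMemoryIndex();
        BufferedPersistentSet set = new BufferedPersistentSet(idx, 2);
        set.add("a");
        set.add("b");
        set.add("c");   // buffer now holds 3 > 2 items, so it is flushed
        System.out.println(set.contains("a") + " flushes=" + idx.flushCount);
    }
}
```

Running `main` prints `true flushes=1`: the third add pushes the buffer past its limit of 2, the batch goes to the index, and "a" is still found there afterwards.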

Update: Steve has blogged about the rest of the story.

Solar, with lyrics. from flight404 on Vimeo.

(Via the aardvark blog recommender)

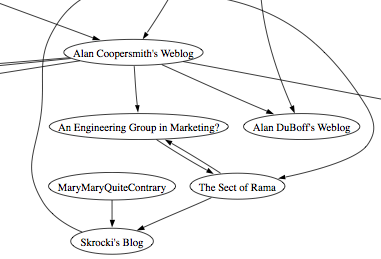

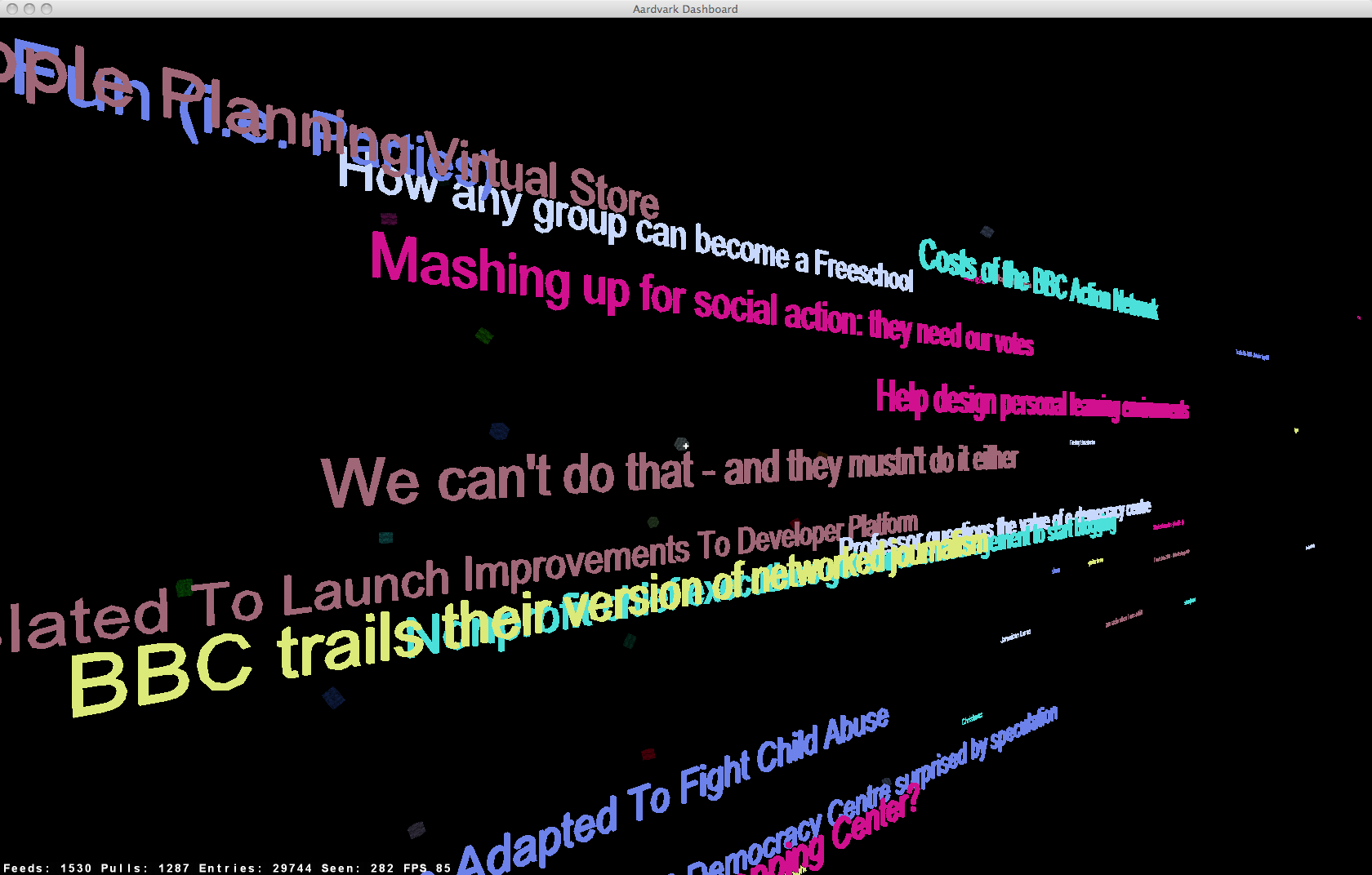

To demonstrate some of the under-the-hood technologies of the recommender (document similarity, classification and autotagging), and to add a bit of sizzle to what can be a bit of a boring demo ("now let me show you 10 blog posts that you might (yawn) find interesting"), we've built a dashboard for Aardvark. The dashboard is a 3D app that shows the status of the live running system. Blog headlines scroll through the space as they are crawled from the web.

Clicking on any headline brings up the story. We can look at the manual tags and autotags for the story, find similar stories, get recommendations and explore the topic space of the blogosphere through this interface. Of course, this isn't the type of interface that anyone would use to read blogs on a daily basis, but it is a great way to show off what is going on behind the scenes in the recommender.

If you are interested in learning more about Project Aura, and Aardvark, and if you'd like to see the dashboard in action, be sure to come to our session at JavaOne. The session is TS-5841: Project Aura: Recommendation for the Rest of Us (Next Generation Web / Cool Stuff), and is at 12:10 PM on Tuesday, May 6.

Via Spotify Blog

Update: Well, it looks like the Beatles are completely gone now. They are now MIA from all my playlists too. Sigh.

Steve promises to start posting regularly about the engine, so check out the Search Guy blog: The Search Guy

I'm not in any position to participate since I don't meet the eligibility requirements (individuals or sole proprietors and privately held businesses), but if I did, I'd be a little cautious about responding to this call, since my cool recommender idea would be published in a public forum - where anyone could see it and copy it. On the other hand, if I had a good idea that was hard to duplicate or had some secret sauce, this might be just the thing to give me a year or two to turn it into the next Google.

Via MyStrands Blog » Looking for the best early-stage recommender start-ups

There's no way you will enjoy this song.

The most unwanted music is over 25 minutes long, veers wildly between loud, quiet, fast and slow. The orchestra features accordion, bagpipe and an operatic soprano singing atonal music, advertising jingles, political slogans, and "elevator" music, while a children's choir sings jingles, holiday and cowboy songs. The song is a masterpiece in modern horror. Read more about this project.

Via Mike Love’s blog

CBS Interactive's Quincy Smith On Re-Org: 'More CBS Corp. Firepower Focused On Interactive' - washingtonpost.com: "Last.fm: It's almost a year since CBS plunked down $280 million for the UK music recommendation start-up and Smith knows he needs to deliver on all that promise. Last.fm has been growing rapidly on its own and has been given start-up like breathing room but it's time to bring it more into the fold. As we've written here before, Last.fm also needs more U.S. traction. Yes, Smith said, they bought Last.fm in part because of its strong international appeal. 'But it's our job, frankly, as a corporation, to make them more valid in the United States.' To that end, CBS will ramp up on-air promos with 'a lot more call outs' starting in May and, very soon, Last.fm will get its own CMO 'charged with plugging them more into the CBS mainstream.' In late May, CBS will start sending viewers of certain shows to the show's music on Last.fm. 'The downside of Last.fm right now, from my perspective, has nothing to do with the service itself ... the question is how do you blow it up and make it a major media brand in the United State?' Last.fm is working with CBS Interactive's Entertainment unit. As Smith put it, 'CBS has done much less of a job with Last.fm than Last.fm has done with Last.fm.'"

No doubt going mainstream with Last.fm is necessary for CBS to justify its $280 million investment - but for a site like Last.fm, going mainstream is really tricky. Last.fm listeners tend to be highly engaged music fans whose taste runs to less mainstream music: you are more apt to find artists like Radiohead, Arcade Fire and the Postal Service in the top ten at Last.fm than the mainstream pop artists like Mariah Carey and Miley Cyrus that populate the Billboard Pop 100. As Last.fm goes mainstream, there is going to be a culture clash as the mainstream listeners collide with the old-school Last.fm listeners. Teenyboppers (and their angry parents) will wonder why Hannah Montana is tagged with 'hardcore death metal' and 'lesbian'. Old-school Last.fm listeners will leave for alternative sites as Last.fm loses its focus as the best site to discover new indie and alternative music. It's Digg all over again. As Digg went mainstream, it lost its focus on technology, and all the hard-core tech readers went back to Slashdot. The problem for Last.fm is that as it goes mainstream, it risks losing much of its core audience, the highly engaged music fan, and this user base of hard-core music fans is one of Last.fm's biggest assets.

Companies like Google have spent years fighting search engine optimizers that try to inflate search rankings in exchange for money. The next wave of this foolishness is upon us: recommender engine optimizers. Companies will take your money in exchange for inflating the playcounts for your tracks. For $747, a company called Tune Boom will increase the playcounts on your MySpace tracks by 300,000. The Tune Boom Pro site just reeks of sliminess:

More Plays Get You Attention. It's the Number One Factor To Get On The MySpace Charts! While Having Lots Of Friends On MySpace Is Important - It's Only Useful If You Have Targeted a REAL Fan Base - It's Proven That the Very First Information Looked At Are Your Song Plays! It ALL Starts With The Plays! *Important: Don't let fans come to your page and see 20, 30, or 50, plays! People like to be a part of something big and don't want to miss out. When they see a high song play count and see that your song plays are increasing, they will be more likely to click and listen to your music and become friends and fans.

Via TuneBoom Pro Apparently Inflates Artists' MySpace Plays | Listening Post from Wired.com

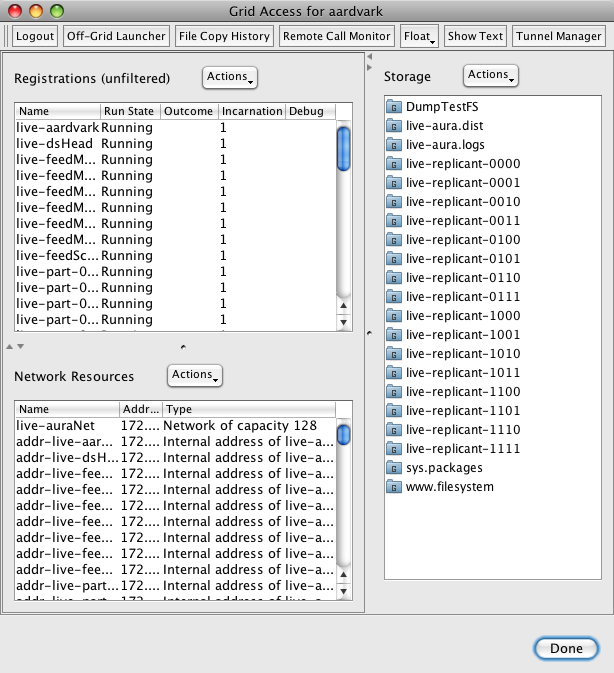

Building a system that is ready to collect so much data and process it can be a challenge. It is certainly a challenge for Project Aura. We want to build a system from the ground up that is ready for all of this data. Building such a web scale system that is highly reliable, fault tolerant and allows us to easily expand our computing capacity without re-architecting the system is not easy. But luckily we have a secret weapon that makes it easy (well, easier) to build a web-scale recommender. That secret weapon is Project Caroline.

Project Caroline is a research program developing a horizontally scalable platform for the development and deployment of Internet services. The platform comprises a programmatically configurable pool of virtualized compute, storage, and networking resources. With Project Caroline we can develop services rapidly, deploy frequently, and automatically expand or contract our use of platform resources to match changing runtime demands. In some ways, it is like Amazon's EC2, in that it allows for elastic computing across a number of networked computers, but Project Caroline works at a higher level: instead of dealing with Linux images, you work with grid resources such as file systems, databases and virtualized containers for processes. It is really quite easy and flexible to use. Rich Zippel describes Project Caroline in his blog as "a really cool platform that allows you to programmatically control all of the infrastructure resources you might need in building a horizontally scaled system. You can allocate and configure databases, file systems, private networks (VLAN's), load balancers, and a lot more, all dynamically, which makes it easy to flex the resources your application uses up and down as required."

Project Aura consists of a set of loosely coupled components that use Jini for service discovery and RMI for IPC. The heart of the system is a distributed datastore that allows us to spread our taste data, and the computation associated with the data, over a number of compute resources. Feeding this datastore is a set of web crawlers, and on top of this sea of components we have a set of web services and web apps for communicating with the outside world. Getting this system to run on a local set of computers in the lab was a daunting task, with all the typical troubles of custom startup scripts, missing environment variables, processes registering with the wrong RMI registry, and so on: all the typical things that can go wrong when trying to get lots of computers working together to solve a single problem. Based upon this, I really thought we were going to have lots of problems getting it all running on Project Caroline, but instead it was a straightforward process. In about a day, Jeff was able to get all of Project Aura running on Project Caroline.
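The post doesn't say how Aura's datastore divides taste data among its nodes. One common technique for spreading data over a fixed number of nodes is hash partitioning, sketched below in Java under that assumption. `PartitionedStore` is a hypothetical class, each partition is just an in-memory set standing in for a remote node, and the 16 partitions in `main` mirror the "16-way datastore" mentioned later in this post.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/**
 * Sketch of hash partitioning: each key is assigned to one of N partitions,
 * so data (and the work on it) is spread across N places. Partitions here are
 * in-memory sets; in a real distributed store each would live on its own node.
 */
public class PartitionedStore {
    private final List<Set<String>> partitions = new ArrayList<>();

    public PartitionedStore(int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new HashSet<>());
        }
    }

    /** The same key always maps to the same partition; floorMod avoids negative indices. */
    public int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), partitions.size());
    }

    public void put(String key) {
        partitions.get(partitionFor(key)).add(key);
    }

    /** Only the owning partition is consulted, never all of them. */
    public boolean contains(String key) {
        return partitions.get(partitionFor(key)).contains(key);
    }

    public int partitionSize(int i) {
        return partitions.get(i).size();
    }

    public int numPartitions() {
        return partitions.size();
    }

    public static void main(String[] args) {
        PartitionedStore store = new PartitionedStore(16);
        for (int i = 0; i < 1000; i++) {
            store.put("item-" + i);
        }
        for (int p = 0; p < store.numPartitions(); p++) {
            System.out.println("partition " + p + ": " + store.partitionSize(p) + " keys");
        }
    }
}
```

Because the mapping depends only on the key, any component can find the owning partition without asking a central directory. The trade-off is that changing the number of partitions remaps most keys, which is one reason schemes such as consistent hashing exist.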

I was worried that once we started to run on the Project Caroline grid, we would lose some of our ability to interact with our running system. I was worried that we wouldn't be able to monitor our system, look at log files, restart individual components, or tweak a configuration, but that is not the case. Project Caroline has a grid accessor tool that lets us take total control of the grid-based Project Aura. We can control processes, configure the network, and interact with the filesystem (we can even use WebDAV to 'mount' the Project Aura filesystem on a local machine). Interacting with Project Aura when it is running on the grid is easier than when it is running locally. All the control is via a single interface - it's very nice.

Now that we have Project Aura running on top of Project Caroline, I'm getting used to the idea of having 60 web crawling threads feeding a 16-way datastore that is being continually indexed by our search engine, all running across some number of processors. I don't really know how many, and I don't really care.

I'm really excited about Project Caroline - this seems to be the right answer to the question that plagues anyone who is developing what they hope will become the next YouTube - How do you build and deploy a system that is going to scale up if and when you get really popular?

Brian Zisk and friends have already scheduled a followup to the very successful SanFran MusicTech Summit. The summit will be on May 8th, right after the 50th anniversary of NARM (the National Association of Recording Merchandisers). Note that this also happens to be right in the middle of JavaOne week, so if you are geeking out at one conference, you are just a cable car ride away from the other. I'm going to JavaOne, and that will no doubt keep me busy all week, but if I do get tired of hearing about yet another closures language extension for Java, I may try to sneak over to the summit. They've already signed up a good set of speakers, so it is sure to be an enriching experience.

Every day I become a bigger fan of Spotify. It is just great to have a seemingly bottomless collection of music to choose from. By creating URLs to everything (tracks, artists, albums and playlists), Spotify is enabling sites like Listiply. By adopting standards like XSPF, Spotify playlists will play well with others. And Spotify is fast. It feels faster than my iTunes does, despite the fact that the music Spotify is serving is probably thousands of miles away, while iTunes is serving music and metadata right from my hard drive. It is not hard to understand why people are going to extreme lengths to get a Spotify invite.

This blog copyright 2010 by plamere